Introduction

In the field of artificial intelligence (AI), transparency and understanding of machine learning (ML) models have become an important area of exploration. One of the main challenges of AI today is that many ML models are considered “black boxes”: they are complex and opaque, which makes it difficult to understand how they work or why they make certain decisions.

The need to open up these black boxes motivated us to develop a product that demystifies AI models in practice, making their behaviour accessible and their decisions justifiable. Here, we trace the journey from conceptualization to successful implementation, highlighting the challenges we faced and how innovative Explainable AI (XAI) techniques helped us overcome them.

Product Design

“BodhiX”, as we call it, is an XAI platform developed by Cloudcraftz to improve the transparency and interpretability of AI models. The product was designed with a clear objective: to provide comprehensive explanations of ML models by analyzing the data they were trained on and testing the models from several different angles.

BodhiX provides the key functionality needed to obtain model explanations, organized into three sections:

- Global Explanation

- Local Explanation

- Counterfactuals

Global Explanations

Global explanation refers to understanding a model’s overall behaviour and decision-making process rather than concentrating on individual predictions. Global explanations give a high-level overview of how the model operates, helping stakeholders understand the model’s strengths and limitations and building trust in its decisions.

BodhiX hosts four methods for Global Explanations:

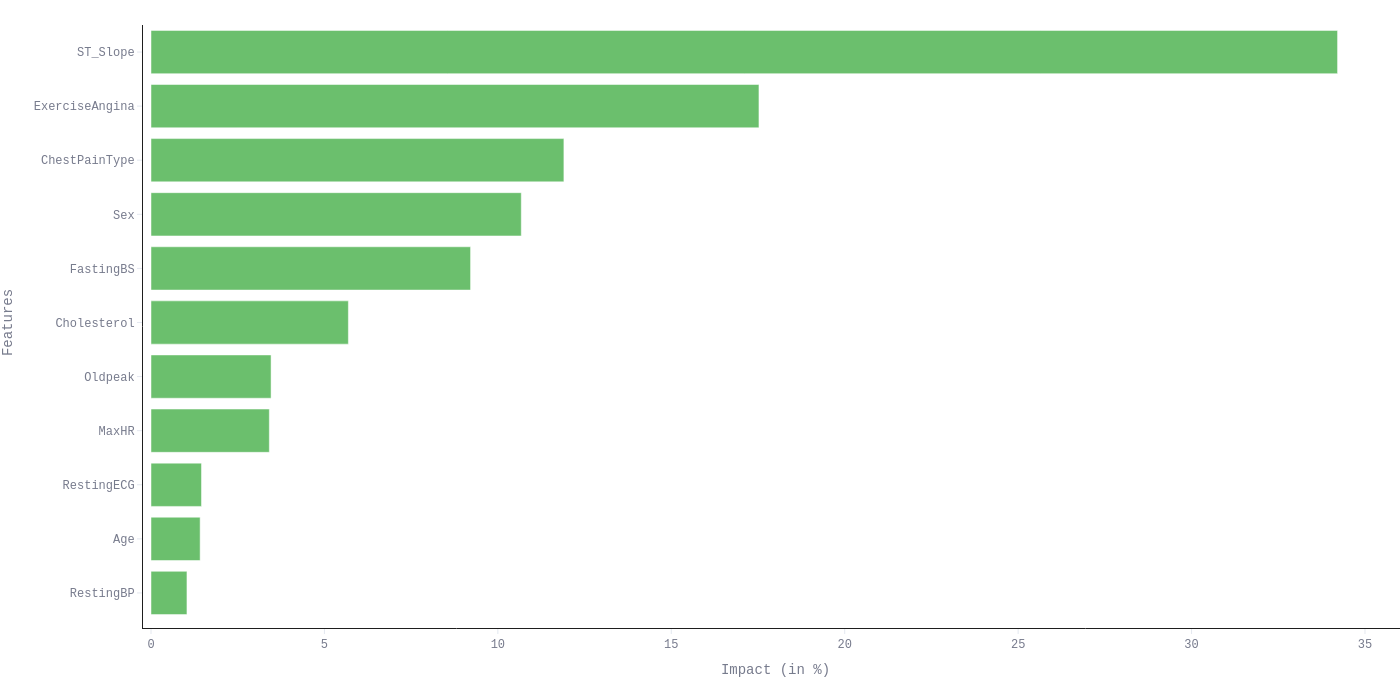

Feature Impact Analysis refers to the process of determining how particular features or variables in a dataset contribute to the output of a model. This gives a measure of each feature’s contribution to the model’s output while also accounting for feature interaction.

The figure above shows a sample Feature Impact Analysis for a model trained to predict heart disease. The analysis shows that ST_Slope has the greatest influence, accounting for 34% of the model’s total impact. Exercise Angina is the second most influential feature, accounting for 17.5% of the total. Resting BP, Resting ECG, and Age, on the other hand, have a negligible influence on the model’s predictions.

Feature Impact Analysis can assist stakeholders in gaining insights into a model’s decision-making process and building trust in its predictions.
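A minimal sketch of how percentage figures like these can be computed, assuming the per-row attributions come from a SHAP-style method (the source does not say exactly how BodhiX computes them): each feature’s share is its mean absolute attribution divided by the sum over all features. The function name `feature_impact_shares` and the toy numbers are hypothetical.

```python
# Hypothetical sketch: convert per-row feature attributions (e.g. SHAP values)
# into percentage shares of the total impact. Not BodhiX's actual code.


def feature_impact_shares(attributions, feature_names):
    """attributions: one list per row, holding one attribution per feature."""
    n_rows = len(attributions)
    # Mean absolute attribution: how strongly a feature moves predictions,
    # regardless of direction.
    mean_abs = [
        sum(abs(row[j]) for row in attributions) / n_rows
        for j in range(len(feature_names))
    ]
    total = sum(mean_abs)
    # Each feature's mean absolute attribution as a percentage of the total.
    return {name: 100.0 * m / total for name, m in zip(feature_names, mean_abs)}


if __name__ == "__main__":
    # Made-up attributions for three rows and three features.
    attrs = [
        [0.6, -0.2, 0.2],
        [-0.4, 0.3, 0.1],
        [0.5, -0.1, 0.0],
    ]
    shares = feature_impact_shares(attrs, ["ST_Slope", "ExerciseAngina", "Age"])
    for name, pct in sorted(shares.items(), key=lambda kv: -kv[1]):
        print(f"{name}: {pct:.1f}%")
```

Taking absolute values before averaging matters: a feature that pushes some predictions up and others down still counts as influential.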

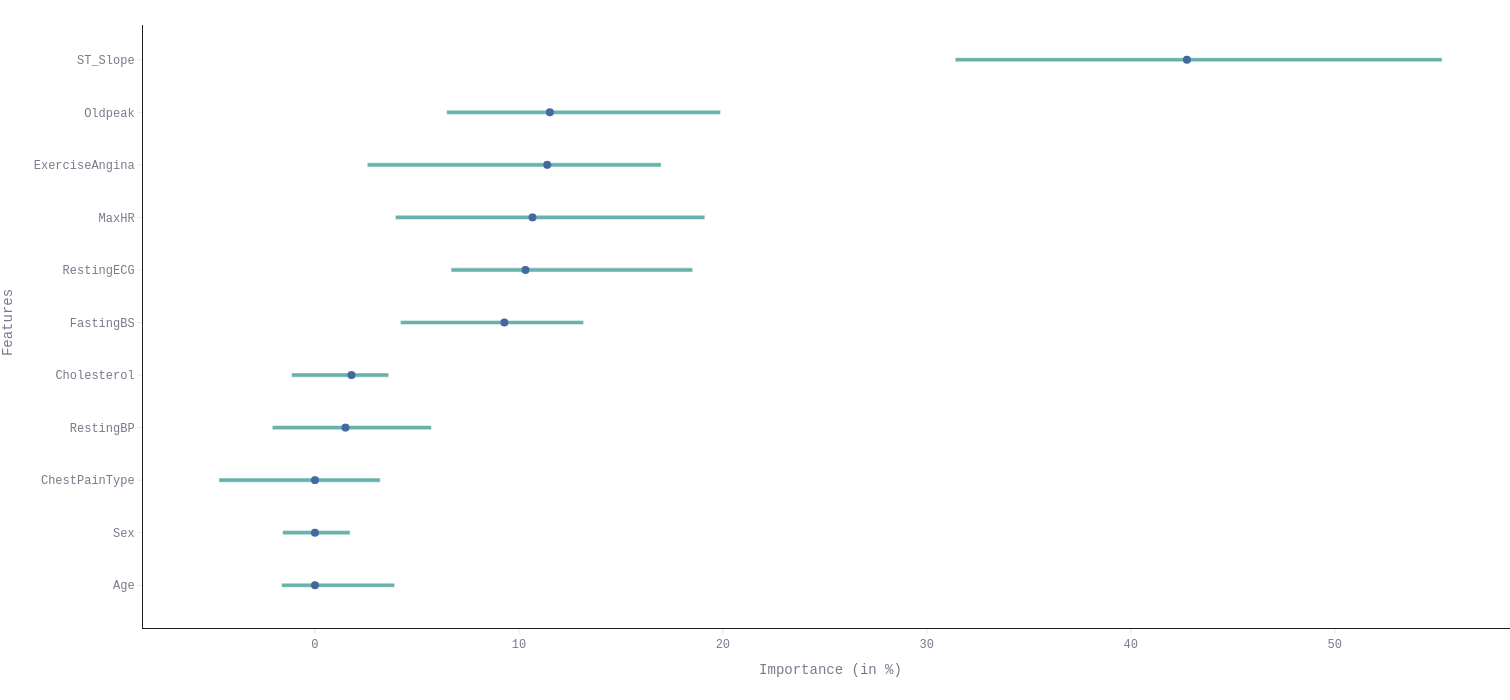

Permutation Feature Importance is used to assess the importance of individual features in an ML model. It works by randomly shuffling the values of a single feature in the dataset and measuring the resulting loss in model performance.

This figure presents such a chart for the same heart disease prediction model. Shuffling ST_Slope causes a significant 42% loss in model accuracy, confirming the findings of the Feature Impact Analysis. Exercise Angina comes out as the third most influential feature, causing a 12% decrease in accuracy when its values were shuffled. Permutation importance thus shows the relative significance of each feature from a second, performance-based perspective.
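The procedure can be sketched in plain Python as follows. This is an illustrative sketch, not BodhiX’s implementation: the name `permutation_importance`, the accuracy metric, `n_repeats` and the fixed seed are assumptions, and the model is any function that maps a feature row (a list) to a class label.

```python
import random


def accuracy(predict, X, y):
    """Fraction of rows whose predicted label matches the true label."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Toy data: the label equals feature 0; feature 1 is a constant the model ignores.
    X = [[i % 2, 7] for i in range(20)]
    y = [row[0] for row in X]
    print(permutation_importance(lambda row: row[0], X, y))
```

Averaging over several shuffles reduces the noise of any single permutation, and a feature the model ignores always scores exactly zero.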

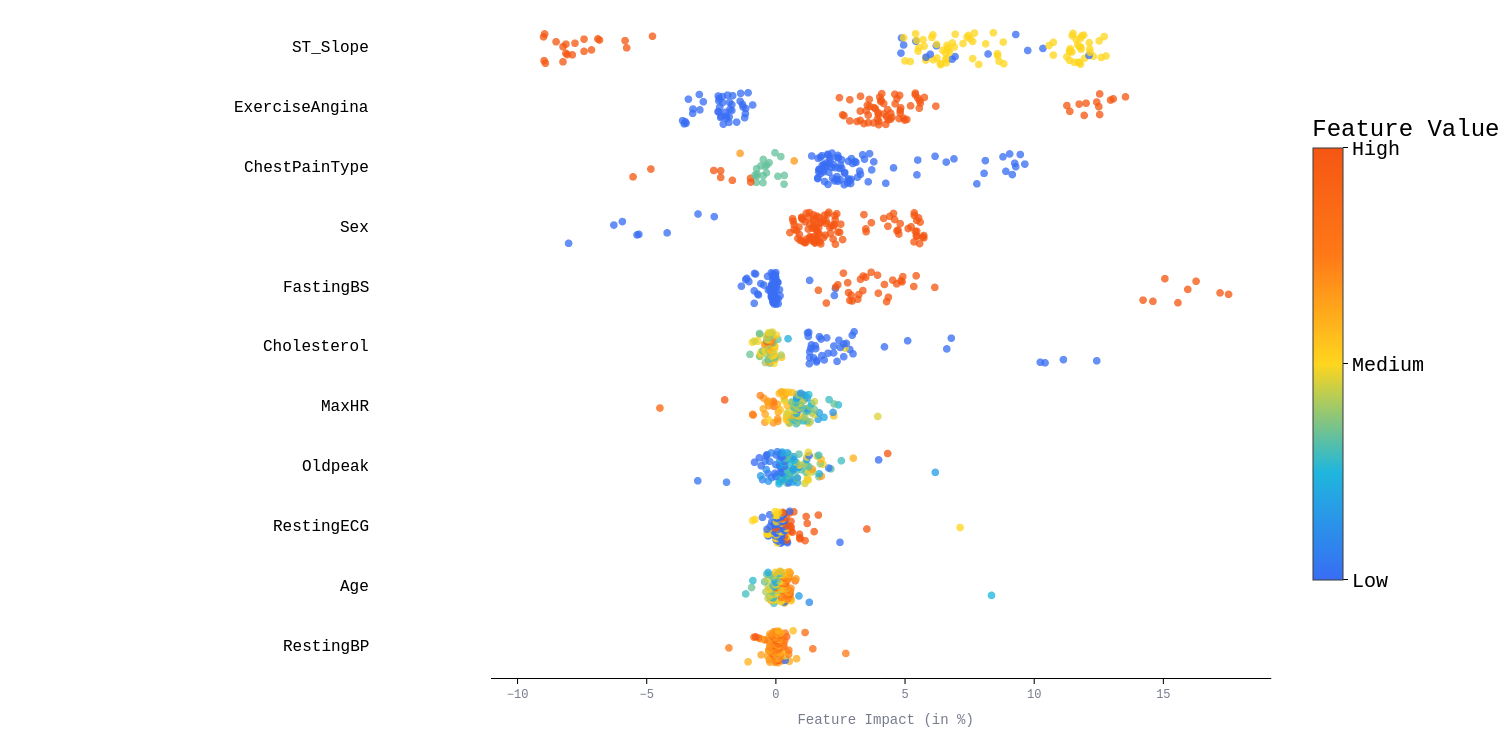

Global Summary is a high-level overview of an ML model’s behaviour and decision-making process that highlights the direction in which each feature influences the prediction.

In the figure above, we can see that when the value of ST_Slope is high, suggesting more activity, the model predicts a lower risk of heart disease. However, the model considers very low cholesterol to be dangerous, which may be connected with over-exercising and a raised risk of heart attack. The Global Summary can therefore help stakeholders determine which features, and which values of those features, matter most in the model’s decision-making process.
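One rough way to turn this direction of influence into numbers is to correlate each feature’s values with its attributions, as sketched below. The approach and the names `influence_direction` and `pearson` are assumptions for illustration, not BodhiX’s method. A single correlation only captures a monotone trend, so non-monotone patterns such as the low-cholesterol effect above still need the plot.

```python
def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    if sx == 0 or sy == 0:
        return 0.0  # no variation, so no measurable direction
    return cov / (sx * sy)


def influence_direction(X, attributions, feature_names):
    """Correlation between each feature's values and its attributions.

    Positive: higher values push the prediction up.
    Negative: higher values push the prediction down.
    """
    summary = {}
    for j, name in enumerate(feature_names):
        values = [row[j] for row in X]
        effects = [row[j] for row in attributions]
        summary[name] = pearson(values, effects)
    return summary


if __name__ == "__main__":
    # Made-up data: feature "a" lowers the prediction as it grows, "b" raises it.
    X = [[1, 10], [2, 20], [3, 30], [4, 40]]
    attrs = [[-2 * r[0], 0.1 * r[1]] for r in X]
    print(influence_direction(X, attrs, ["a", "b"]))
```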

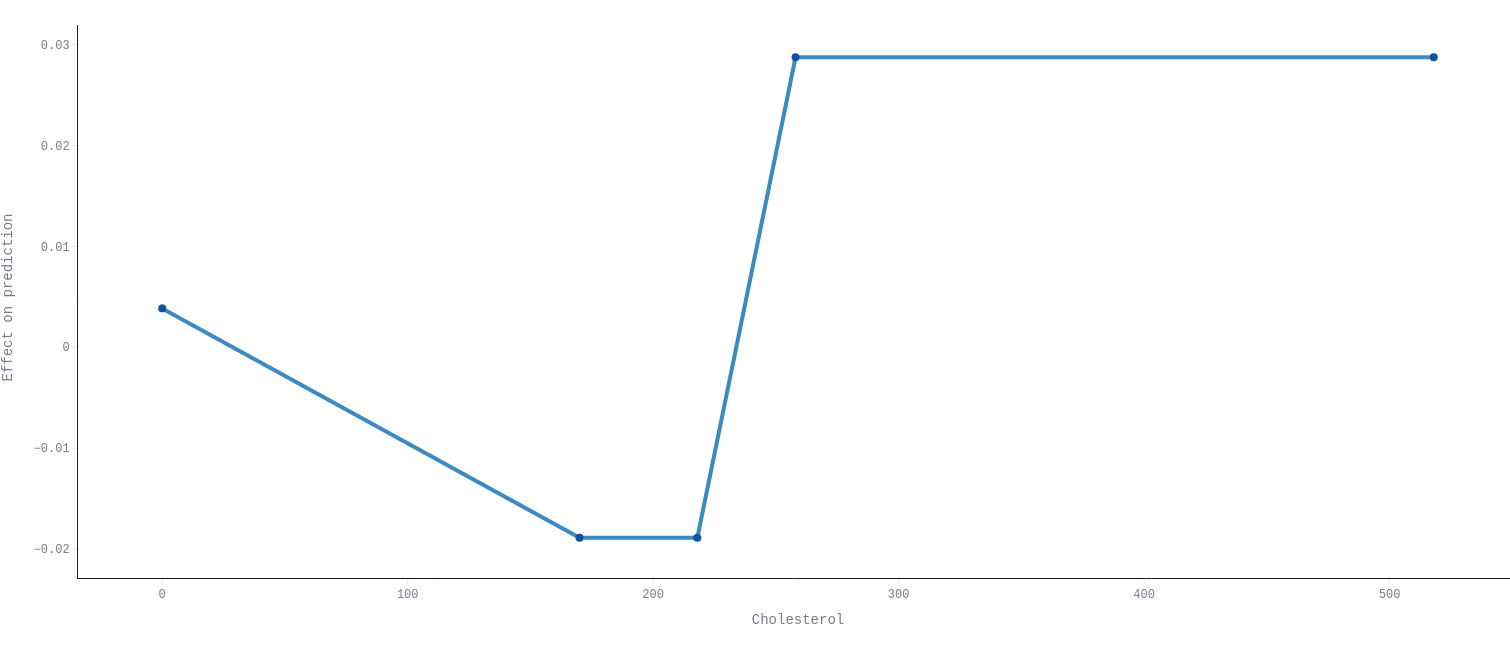

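Accumulated Local Effects (ALE) describe how a single input feature influences a model’s output on average. ALE divides the feature’s range into small intervals, measures how the prediction changes as the feature moves across each interval while the other features keep their observed values, and accumulates these changes. Because it only compares nearby values, ALE avoids evaluating the model on unrealistic feature combinations when features are correlated.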
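A minimal first-order ALE computation for one numeric feature might look like this. It is a sketch under stated assumptions (quantile-based intervals, a `predict` function that scores one row given as a list, the hypothetical name `ale_1d`), not BodhiX’s code.

```python
import bisect


def ale_1d(predict, X, j, n_bins=10):
    """First-order ALE for numeric feature j.

    Returns (edges, ale), where ale[k] is the centred effect at edges[k].
    """
    values = sorted(row[j] for row in X)
    n = len(values)
    # Quantile-based interval edges, so each interval holds a similar number of rows.
    edges = sorted({values[round(k * (n - 1) / n_bins)] for k in range(n_bins + 1)})
    if len(edges) < 2:
        raise ValueError("feature j needs at least two distinct values")
    K = len(edges) - 1
    sums = [0.0] * (K + 1)
    counts = [0] * (K + 1)
    for row in X:
        # Interval k covers (edges[k-1], edges[k]]; the minimum value joins interval 1.
        k = max(bisect.bisect_left(edges, row[j]), 1)
        lo = row[:j] + [edges[k - 1]] + row[j + 1:]
        hi = row[:j] + [edges[k]] + row[j + 1:]
        # Local effect: prediction change across the interval, other features unchanged.
        sums[k] += predict(hi) - predict(lo)
        counts[k] += 1
    # Accumulate the mean local effect of each interval from the lowest edge upwards.
    ale = [0.0]
    for k in range(1, K + 1):
        ale.append(ale[-1] + sums[k] / counts[k])
    # Centre the curve so its average over the data is (approximately) zero.
    mean_effect = sum(counts[k] * (ale[k - 1] + ale[k]) / 2 for k in range(1, K + 1)) / n
    return edges, [a - mean_effect for a in ale]


if __name__ == "__main__":
    X = [[float(i), float(i % 3)] for i in range(30)]
    edges, ale = ale_1d(lambda r: 3 * r[0] + r[1], X, j=0, n_bins=5)
    print(edges)
    print([round(a, 2) for a in ale])
```

For a linear model such as `3 * x0 + x1`, the ALE curve of `x0` rises with slope 3, which makes a quick sanity check.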

Local Explanation

Local Explanation explains how a machine learning model arrived at its prediction for a specific instance. In contrast to Global Explanation, which examines a model’s behaviour across a full dataset, Local Explanation focuses on a single input instance and explains how the model produced its prediction for that instance. It is very effective for building trust in the model’s predictions and for revealing cases where the model may be making errors or showing bias.
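The source does not name the local method BodhiX uses. As one common example, the sketch below computes exact Shapley values for a single instance by brute force over feature coalitions, assuming that features left out of a coalition take their values from a single baseline row. `shapley_values` is a hypothetical name, and the exponential cost limits this exact form to a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions for instance x against one baseline row.

    Features outside a coalition take their baseline value; cost grows as 2**n.
    """
    n = len(x)

    def value(coalition):
        # Model output with coalition features from x and the rest from the baseline.
        row = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(row)

    phi = [0.0] * n
    for i in range(n):
        others = [k for k in range(n) if k != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


if __name__ == "__main__":
    model = lambda r: 2 * r[0] + 5 * r[1] - r[2]
    print(shapley_values(model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
```

The attributions always add up to the difference between the instance’s prediction and the baseline prediction, so each value can be read as that feature’s share of the change.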

Counterfactuals

Counterfactuals are an Explainable AI technique that shows how a machine learning model would have predicted a different outcome for a given input instance if some of its attributes had different values. In other words, counterfactuals are alternative “what-if” scenarios that help explain the model’s behaviour. By creating counterfactuals, stakeholders can gain insight into how the model makes decisions, identify instances where its predictions may be erroneous or biased, and find ways to improve its performance. Counterfactuals are therefore an effective strategy for increasing both trust in machine learning models and their transparency.
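A very simple counterfactual search can be sketched as follows: try candidate values for one feature at a time and keep the smallest change that flips the prediction. The function name, the candidate grids and the unscaled absolute-difference cost are assumptions for illustration; practical counterfactual methods usually normalize features and may change several at once.

```python
def single_feature_counterfactual(predict, x, candidates):
    """Smallest single-feature change that flips the prediction.

    candidates maps a feature index to the values to try for that feature.
    Returns (feature index, new value, absolute change), or None if nothing flips.
    """
    original = predict(x)
    best = None
    for j, values in candidates.items():
        for v in values:
            trial = list(x)
            trial[j] = v
            if predict(trial) != original:
                cost = abs(v - x[j])  # unscaled; assumes comparable feature units
                if best is None or cost < best[2]:
                    best = (j, v, cost)
    return best


if __name__ == "__main__":
    # Toy classifier: positive when x0 + 2 * x1 exceeds 10.
    model = lambda r: int(r[0] + 2 * r[1] > 10)
    print(single_feature_counterfactual(model, [2, 3], {0: range(11), 1: range(11)}))
```

The result answers a what-if question directly: “which single change, and how large, would have produced the other outcome?”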

How We Reached Here

The journey was not without its challenges, but our approach was to take them up one by one and conquer them. We started with the simplest solutions and gradually built on top of them. Since there was no proven path in this field that could be labelled right or wrong, we were on our own. By finally combining all of these solutions, we found the path that worked best for us.

Here are a few of the challenges we faced:

- Complexity in Integration: Integrating various types of ML models and handling diverse datasets presented significant challenges, necessitating a flexible and robust system architecture.

- Balancing Local vs. Global Explainability: Providing both detailed and overview explanations required careful design to ensure both types of insight were accurate and informative.

- User Interface Design: Developing an intuitive user interface that could accommodate complex data visualizations and allow users to easily perform what-if analyses was another critical challenge.

To tackle these issues, the team employed a series of strategic and technical solutions:

- Modular Architecture: The system was built with a modular architecture, so that different models and data types could be integrated easily and the platform could scale.

- Advanced Data Visualization: To aid in understanding, sophisticated visualization tools were implemented, making the interpretation of complex concepts like SHAP values and what-if scenarios clear and actionable.

- Continuous Feedback Loop: User feedback was integral to refining the product. Continuous testing and iteration helped in smoothing out complexities in the user interface.
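To illustrate the idea of a modular design, the sketch below shows a hypothetical plug-in registry: models are wrapped behind one `predict` interface, and explanation methods register themselves by name. None of these names come from BodhiX; they only illustrate the pattern.

```python
from typing import Callable, Dict, List, Protocol, Sequence

Row = List[float]


class Model(Protocol):
    """The single interface every explainer relies on."""

    def predict(self, row: Row) -> float: ...


class CallableModel:
    """Adapter: wraps any prediction function so all explainers see one interface."""

    def __init__(self, fn: Callable[[Row], float]):
        self._fn = fn

    def predict(self, row: Row) -> float:
        return self._fn(row)


EXPLAINERS: Dict[str, Callable[..., object]] = {}


def register(name: str):
    """Decorator that adds an explanation method to the registry under a name."""

    def wrap(fn):
        EXPLAINERS[name] = fn
        return fn

    return wrap


@register("prediction_range")
def prediction_range(model: Model, X: Sequence[Row]) -> tuple:
    """Toy explainer used only to demonstrate the plug-in pattern."""
    preds = [model.predict(r) for r in X]
    return min(preds), max(preds)


def explain(method: str, model: Model, X: Sequence[Row]):
    """Look up an explainer by name and run it; new methods plug in via @register."""
    if method not in EXPLAINERS:
        raise KeyError(f"unknown explanation method: {method}")
    return EXPLAINERS[method](model, X)


if __name__ == "__main__":
    m = CallableModel(lambda r: 2 * r[0])
    print(explain("prediction_range", m, [[1.0], [3.0]]))
```

With this shape, supporting a new model type means writing one adapter, and adding a new explanation method means registering one function; neither change touches the rest of the system.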

Conclusion

All the effort we put in has produced a product that not only meets its initial goals but has also become an everyday tool for our data scientists. It has been used to explain models in sectors ranging from finance to healthcare, where understanding AI decisions is critical.

BodhiX is proving to be the right tool for looking inside the black box of ML models and making them transparent and understandable. As AI continues to evolve and integrate into every aspect of our lives, the importance of products like this will only grow.