Hello Readers! If you are a beginner, this post will get you up to speed with NLP. I will share the notes that helped me learn the basics and that I still use for quick reference.

Let’s start with Natural Language Processing.

Note: Please do let me know if you find any part of this post inappropriate or misleading.

Session 1 — Basics of Text Preprocessing

Tokenization

Definition: Breaking text into smaller units called tokens, typically a paragraph into sentences or a sentence into words.
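A minimal regex-based sketch of sentence and word tokenization. The helpers `simple_sent_tokenize` and `simple_word_tokenize` are hypothetical, not NLTK functions; the hands-on code later in this post uses NLTK's `sent_tokenize` and `word_tokenize`, which handle many more edge cases.

```python
import re

def simple_sent_tokenize(text):
    # Split after ., ! or ? followed by whitespace.
    # Naive rule: it will wrongly split after abbreviations such as "Dr."
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def simple_word_tokenize(sentence):
    # Keep runs of letters, digits and apostrophes; punctuation is dropped
    return re.findall(r"[A-Za-z0-9']+", sentence)

text = "I am going home. His house is big!"
for sent in simple_sent_tokenize(text):
    print(simple_word_tokenize(sent))
```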

Stopwords

Definition: Stopwords are very common words (such as to, is, the) that appear frequently but contribute little to the actual meaning of a sentence. Stopword removal drops them from the text.

Example: In "I am going to go to his house", the word "to" appears twice. It is needed to make the sentence grammatically correct, but not to express its meaning; the sentence would still convey the same message without it.
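A small sketch of stopword removal on the example sentence. The `STOPWORDS` set is a hypothetical, hand-picked list for this example only (NLTK's English list, used later in this post, is much longer), and `remove_stopwords` is an illustrative helper.

```python
import re

# Hypothetical stopword set for this example only
STOPWORDS = {"i", "am", "to", "his", "is", "a", "the"}

def remove_stopwords(sentence):
    # Lowercase first so that "To" and "to" match the same stopword,
    # then keep runs of letters/apostrophes (punctuation is dropped)
    tokens = re.findall(r"[a-z']+", sentence.lower())
    return [t for t in tokens if t not in STOPWORDS]

print(remove_stopwords("I am going to go to his house."))  # ['going', 'go', 'house']
```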

Stemming

Definition: Reducing each word in the sentence (usually after stopword removal) to its base form, called the stem, typically by stripping suffixes according to a set of rules.

Example: Take the two words history and historical. The base they have in common is histor, so that becomes the stem. Sometimes, as here, the stem turns out to be meaningless (histor is not a word); this is a disadvantage of stemming.

Example 2: For the words going and goes, the base word is go, which in this case is meaningful.
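A toy, rule-based suffix stripper that reproduces the two examples above. The suffix list and `toy_stem` are hypothetical and deliberately tiny; a real stemmer such as NLTK's PorterStemmer (used later in this post) applies a much richer set of rules, so its stems can differ from these.

```python
# Hypothetical suffix rules, checked in order (longer suffixes first)
SUFFIXES = ["ical", "ing", "es", "s", "y"]

def toy_stem(word):
    for suffix in SUFFIXES:
        # Strip the first matching suffix, keeping at least 2 characters of stem
        if word.endswith(suffix) and len(word) - len(suffix) >= 2:
            return word[: -len(suffix)]
    return word

for w in ["history", "historical", "going", "goes"]:
    print(w, "->", toy_stem(w))
```

The rules know nothing about real words, which is exactly why a stem like histor can come out meaningless.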

Lemmatization

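Definition: Like stemming, lemmatization reduces a word to a base form, but it overcomes the main problem of stemming: the base form it produces (the lemma) is always a meaningful word.

Example: historical and history are both converted to the base word history.

Its main disadvantage is that it is slower than stemming, because it has to look words up in a dictionary.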

Uses of Stemming

- Spam Classification

- Review Classification

Uses of Lemmatization

- Text Summarization

- Language Translation

- Chatbots

Stemming is rule-based, whereas lemmatization is dictionary-based.
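To make the rule-based vs dictionary-based contrast concrete, here is a toy dictionary lookup. `LEMMA_DICT` and `toy_lemmatize` are hypothetical and cover only a handful of words; a real lemmatizer such as NLTK's WordNetLemmatizer (used later in this post) relies on a full lexical database and also takes the part of speech into account.

```python
# Hypothetical lemma dictionary for illustration only
LEMMA_DICT = {
    "historical": "history",
    "histories": "history",
    "going": "go",
    "goes": "go",
    "went": "go",
}

def toy_lemmatize(word):
    # Dictionary lookup; a word missing from the dictionary is returned unchanged
    return LEMMA_DICT.get(word, word)

for w in ["historical", "went", "history"]:
    print(w, "->", toy_lemmatize(w))
```

A dictionary can map the irregular form went to go, which no suffix-stripping rule could do, but every word costs a lookup and any word missing from the dictionary is left as it is.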

Session 2 — Basics of Text Preprocessing

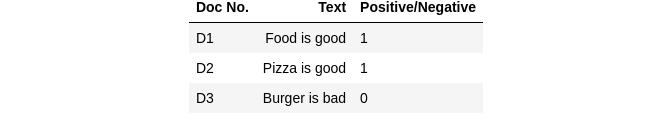

Say we are doing sentiment analysis:

- All the data points D1, D2, D3 taken together form the corpus; it is like an entire paragraph that includes all the sentences.

- Each data point (D1, D2, and so on) is a document; here a document is the same as a sentence.

- The set of unique words in the entire corpus is known as the vocabulary, and the number of such words is the vocabulary size.

- The collection of all words in the corpus (not necessarily unique) is known as the words.
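A minimal sketch of these terms in plain Python, using the three-sentence corpus from the one-hot encoding example that follows. Splitting on whitespace is a simplifying assumption, and the variable names are illustrative.

```python
# Toy corpus: each string is one document (here, one sentence)
corpus = ["man is good", "cat is bad", "dog is good"]

# Words: every token in the corpus, duplicates included
words = [w for doc in corpus for w in doc.split()]

# Vocabulary: the unique words, kept in order of first appearance
vocabulary = list(dict.fromkeys(words))

print("Documents:", len(corpus))
print("Words:", len(words))
print("Vocabulary:", vocabulary, "size", len(vocabulary))
```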

One Hot Encoding

Say there is a corpus = [‘man is good’, ‘cat is bad’, ‘dog is good’]

Here the vocabulary size is 6 (six unique words: man, is, good, cat, bad, dog).

So for D1, i.e. ‘man is good’, with the vocabulary ordered as man, is, good, cat, bad, dog, the one-hot encoded format becomes:

D1 = [[1, 0, 0, 0, 0, 0], [0, 1, 0, 0, 0, 0], [0, 0, 1, 0, 0, 0]]

D1 is the sentence man is good. We take one word of the document at a time and represent it as a vector whose length equals the vocabulary size, with 1 at the position of that word in the vocabulary and 0 everywhere else. The first word of D1 is man, the first word of the vocabulary, so its vector has 1 in the first position and 0 in the remaining five. We do the same for every other word in the document, and then repeat this for all documents.
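The encoding described above can be sketched in plain Python. `one_hot_encode` is a hypothetical helper, and ordering the vocabulary by first appearance (man, is, good, cat, bad, dog) is an assumption; any fixed order works as long as it is used consistently.

```python
def one_hot_encode(document, vocabulary):
    # One vector per word: 1 at the word's vocabulary index, 0 elsewhere
    vectors = []
    for word in document.split():
        vec = [0] * len(vocabulary)
        vec[vocabulary.index(word)] = 1  # raises ValueError for an unknown word
        vectors.append(vec)
    return vectors

corpus = ["man is good", "cat is bad", "dog is good"]
vocabulary = list(dict.fromkeys(w for doc in corpus for w in doc.split()))
encoded = [one_hot_encode(doc, vocabulary) for doc in corpus]

for doc, vectors in zip(corpus, encoded):
    print(doc, "->", vectors)
```

Because `list.index` raises a ValueError for a word that is not in the vocabulary, calling `one_hot_encode("cow is good", vocabulary)` fails, which is the out-of-vocabulary problem in practice.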

Advantages

- Easy to implement

- Intuitive

Disadvantages

- Sparse Matrix

- Variable length: if sentence sizes are not fixed, the model cannot be trained. Say Document 1 has 4 words while Documents 2 and 3 have 3 words each. Document 1 then produces four vectors and the others three, but in ML every data point must have the same number of features. This corpus only works because every sentence happens to have exactly 3 words.

- Out of Vocabulary: a word that is not in the vocabulary (for example, a new word seen at prediction time) cannot be encoded at all.

- Semantic meaning is not captured: each vector has a single 1 and the rest 0, so every pair of words is equally far apart and the relationships between them are lost.

Bag of Words

Say there are 3 sentences:

- D1 — He is a good boy

- D2 — She is a good girl

- D3 — Boy and Girl are good

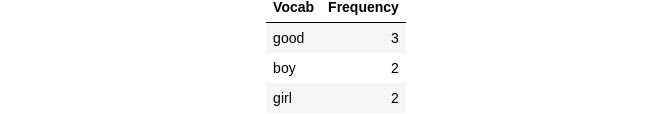

The first task is to remove the stopwords (he, she, is, a, and, are, and so on) from each sentence. After removing the stopwords, we find the vocabulary and count the frequency of each word.

After removing stopwords

- D1 becomes — good boy

- D2 becomes — good girl

- D3 becomes — boy girl good

Note: Always lowercase all words before removing stopwords; otherwise capitalised words such as He or She will not match the lowercase entries in a stopword list.

Vocabulary — 3 (good, boy, girl)

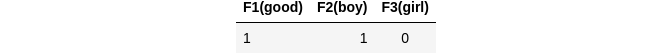

So now the vocabulary becomes our features. good is F1, boy is F2 and girl is F3.

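In this example, features are numbered in decreasing order of frequency: the word with the highest frequency becomes feature 1, and so on. Libraries may order features differently; scikit-learn's CountVectorizer, used later in this post, sorts them alphabetically.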

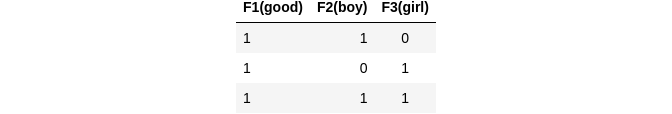

Now we create the matrix.

Explanation of Above Matrix:

First has F1 as 1 F2 as 1 and F3 as 0, because sentence 1 has only two words good and boy similarly second sentence has good girl so good i.e. F1 is 1 and girl i.e. F3 is 1 rest 0.

But say a situation arises where the sentence is like : good boy good, there are 2 good‘s so in that case just increase the count in the matrix like this .

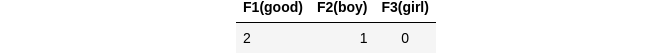

But there is an option in the bag of words, i.e. Binary BOW which means no matter how many times a word comes in a sentence we just keep it as one .

For Example

Sentence is — good boy good. The bow for this sentence is:
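A plain-Python sketch of the Bag of Words steps above: lowercase, drop stopwords, order features by frequency, then count (or mark presence, for binary BOW). The `STOPWORDS` set and function names are assumptions for this example. Ties in frequency are broken by first appearance, since `Counter.most_common` lists equal counts in the order they were first encountered.

```python
from collections import Counter

# Hypothetical stopword list covering only the words in this example
STOPWORDS = {"he", "she", "is", "a", "and", "are"}

def clean(sentence):
    # Lowercase first, then drop stopwords
    return [w for w in sentence.lower().split() if w not in STOPWORDS]

def build_features(docs):
    # Most frequent word first; equal counts keep first-seen order
    counts = Counter(w for doc in docs for w in clean(doc))
    return [w for w, _ in counts.most_common()]

def bow_vector(sentence, features, binary=False):
    counts = Counter(clean(sentence))
    if binary:
        # Binary BOW: 1 if the word occurs at all, else 0
        return [1 if counts[f] > 0 else 0 for f in features]
    return [counts[f] for f in features]

docs = ["He is a good boy", "She is a good girl", "Boy and Girl are good"]
features = build_features(docs)
matrix = [bow_vector(d, features) for d in docs]

print(features)
for row in matrix:
    print(row)
print(bow_vector("good boy good", features))
print(bow_vector("good boy good", features, binary=True))
```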

Advantages

- Simple and Intuitive

Disadvantages

- Sparsity remains: the vectors are still sparse, although less so than with one-hot encoding.

- Word order is lost: the columns are arranged by feature (here, the most frequent word gets the first column, and so on), not by the position of words in the sentence, so the vector does not record where each word appeared.

- Not able to capture semantic meaning.

How do we solve this problem of semantic meaning?

- N-grams: N-grams are contiguous sequences of words, symbols or tokens in a document. In technical terms, they are neighbouring sequences of items in a document.

N denotes how many consecutive words make up each gram. For example:

Sentence — “I reside in Kolkata”

Bigram = ['I reside', 'reside in', 'in Kolkata']

Trigram = ['I reside in', 'reside in Kolkata']

It works like a sliding window of size N. Only contiguous combinations are taken; pairs such as reside Kolkata or I Kolkata are not N-grams.
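The sliding window can be written as a single list comprehension. `ngrams` is a hypothetical helper name; it reproduces the bigrams and trigrams listed above for "I reside in Kolkata".

```python
def ngrams(tokens, n):
    # Slide a window of size n over the tokens, one position at a time
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "I reside in Kolkata".split()
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)
print(bigrams)
print(trigrams)
```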

We then use these combinations as features in addition to the vocabulary features we were already using.

Note: Always create N-grams from the entire corpus. For our three cleaned sentences, the bigrams are good boy, good girl, boy girl and girl good.

Features now: |F1(good)|F2(boy)|F3(girl)|F4(good boy)|F5(good girl)|F6(boy girl)|F7(girl good)|
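A sketch that builds the combined unigram and bigram features for the cleaned Bag of Words corpus and counts them per document. The `ngrams` helper is defined again so the block stands alone, the unigram order is taken from the frequency ordering above, and listing bigrams in order of first appearance is an assumption.

```python
from collections import Counter

def ngrams(tokens, n):
    # Contiguous windows of n tokens
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

# Stopword-free, lowercased documents from the Bag of Words example
cleaned = [["good", "boy"], ["good", "girl"], ["boy", "girl", "good"]]

unigrams = ["good", "boy", "girl"]  # F1-F3, ordered by frequency
# Every bigram in the whole corpus, in order of first appearance
bigrams = list(dict.fromkeys(g for doc in cleaned for g in ngrams(doc, 2)))
features = unigrams + bigrams

# Count each feature (unigram or bigram) in each document
matrix = []
for doc in cleaned:
    counts = Counter(doc + ngrams(doc, 2))
    matrix.append([counts[f] for f in features])

print(features)
for row in matrix:
    print(row)
```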

Imports

import re
import warnings

import nltk
from nltk.corpus import stopwords
from nltk.stem import PorterStemmer, WordNetLemmatizer
# Used for creating Bag of Words
from sklearn.feature_extraction.text import CountVectorizer

warnings.filterwarnings('ignore')

# Punkt: a tokenizer that splits paragraphs into sentences using an unsupervised algorithm
nltk.download('punkt')
# Recent NLTK releases load the Punkt model from 'punkt_tab' instead
nltk.download('punkt_tab')
# Stopwords in English
nltk.download('stopwords')
# WordNet data used by WordNetLemmatizer
nltk.download('wordnet')
nltk.download('omw-1.4')


Code

paragraph = " In finance, stock (also capital stock) consists of the shares of which ownership of a corporation or company is divided.[1] (Especially in American English, the word 'stocks' is also used to refer to shares.)[1][2] A single share of the stock means fractional ownership of the corporation in proportion to the total number of shares.......A 'dividend king' is a stock which has had an increasing or constant dividend yield for over 50 successive years."

# output

paragraph

" In finance, stock (also capital stock) consists of the shares of which ownership of a corporation or company is divided.[1] (Especially in American English, the word 'stocks' is also used to refer to shares.)[1][2] A single share of the stock means fractional ownership of the corporation in proportion to the total number of shares...... A 'dividend king' is a stock which has had an increasing or constant dividend yield for over 50 successive years."

Next, we tokenize and clean the above text, and then apply stemming to find the base words.

# Step 1 - Tokenize the paragraph into sentences
sentences = nltk.sent_tokenize(paragraph, language='english')

# output
sentences[0]
' In finance, stock (also capital stock) consists of the shares of which ownership of a corporation or company is divided.'

# output
sentences[-1]
"A 'dividend king' is a stock which has had an increasing or constant dividend yield for over 50 successive years."

# Step 2 - Clean each sentence
corpus = []
for sentence in sentences:
    # regex: replace every character other than a-z, A-Z and 0-9 with a space
    txt = re.sub('[^a-zA-Z0-9]', ' ', sentence)
    # convert everything to lowercase to maintain uniformity
    txt = txt.lower()
    corpus.append(txt)

# Step 3 - Stemming and Lemmatization
stemming = PorterStemmer()
# Build the stopword set once instead of once per word
stop_words = set(stopwords.words('english'))

for sentence in corpus:
    # Tokenize each sentence further into single words
    words = nltk.word_tokenize(sentence)
    for word in words:
        if word not in stop_words:
            print(stemming.stem(word))

# output
financ
stock
also
capit
stock
consist
share
ownership
corpor
compani
...
...
success
year

Let us also see the effect of applying lemmatization to the same sentences.

lemma = WordNetLemmatizer()
stop_words = set(stopwords.words('english'))

for sentence in corpus:
    # Tokenize each sentence further into single words
    words = nltk.word_tokenize(sentence)
    for word in words:
        if word not in stop_words:
            print(lemma.lemmatize(word))

# output
finance
stock
also
capital
stock
consists
share
ownership
corporation
company
....
successive
year

Now we will see how to create a bag of words.

# Before creating the BOW, remove all stopwords and lemmatize the remaining words
stop_words = set(stopwords.words('english'))
new_corpus = []
for sen in corpus:
    # split() also discards leading and trailing whitespace
    words = sen.split()
    rev = [lemma.lemmatize(word) for word in words if word not in stop_words]
    new_corpus.append(' '.join(rev))

# Creating BOW
bow = CountVectorizer()
X = bow.fit_transform(new_corpus)

# Use CountVectorizer(binary=True) if you want a binary BOW.
# Use CountVectorizer(ngram_range=(3, 3)) if you want only trigrams.
# Use CountVectorizer(ngram_range=(2, 3)) if you want both bigrams and trigrams.
# vocabulary_ is a dictionary whose keys are terms and whose values are column indices in the feature matrix.

# output
bow.vocabulary_
{'finance': 52,
 'stock': 133,
 'also': 2,
 'capital': 13,
 'consists': 23,
 'share': 125,
 'ownership': 98,
 'corporation': 25,
 'company': 21,
 .....
 'year': 152,
 'king': 76,
 'increasing': 69,
 'constant': 24,
 '50': 0,
 'successive': 136}

Now let us look at the newly created array, the vectorized representation of the sentences, built here with the BOW technique.

# output

new_corpus[0]

'finance stock also capital stock consists share ownership corporation company divided'

# This is only the first sentence of the corpus. It has 1 in the 3rd column because index 2 is allocated to 'also', 2 at index 133 because 'stock' occurs twice, and so on for the other words. There are 153 columns in total.

# output

X[0].toarray()

array([[0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1,

0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0,

0, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]])

TF-IDF

As we saw, a problem with BOW is that it does not capture the semantic meaning of sentences. For example, consider these two sentences:

The food is good.

The food is not good.

They mean very different things. But if we remove the stopwords and construct the BOW matrix, the first sentence becomes the vector [1, 1, 0] and the second [1, 1, 1] (vectors of length 3 because the vocabulary is food, good, not). Their cosine similarity is about 0.82, which says they are very similar, and that is clearly untrue. This is a major drawback of the Bag of Words (BOW) approach: it gives equal weight to all words, so it cannot tell how important each word is in a sentence. NOT plays a crucial role in the second sentence above, yet it gets the same weight (1) as every other word.

To overcome this issue, we now look at TF-IDF (Term Frequency and Inverse Document Frequency). It gives more weight to words that are rare in the corpus and lower weight to common words that appear in almost every sentence.

A basic implementation of cosine similarity between two vectors

import numpy as np

def cosine(a, b):
    # Dot product divided by the product of the two vector lengths (L2 norms)
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return cos

# Cosine similarity between the two vectors
cosine([1, 1, 0], [1, 1, 1])
0.8164965809277259

Take 3 sentences that form our corpus:

The boy is good.

The girl is good.

Boy and girl are good.

After removing stopwords and lowercasing, the sentences become:

- boy good

- girl good

- boy girl good

Now, to create TF and IDF, we use the following formulas:

TF(word, sentence) = (number of times the word appears in the sentence) / (total number of words in the sentence)

IDF(word) = log(total number of sentences in the corpus / number of sentences containing the word)

TF

We build the TF table: the Word column lists the vocabulary (good, boy, girl), and there is one column per sentence. Each cell is the ratio of the number of times that word appears in the sentence to the total number of words in the sentence. For example, good appears once in sentence 1, which has 2 words in total, so the TF for row 1 (good), column 1 (sentence 1) is 1/2.

Now we create the IDF

It is built with the IDF formula: for each word in the vocabulary, we take the logarithm of the ratio between the total number of sentences in the corpus and the number of sentences in which that word appears. For example, for good the value is log(3/3) = 0, because there are 3 sentences in the corpus (so the numerator is 3) and good appears in all three of them (so the denominator is also 3).

Next, we multiply TF by IDF: we take each sentence column of the TF table and multiply it element-wise with the IDF values. For sentence 1, the TF column is [1/2, 1/2, 0] for good, boy and girl respectively; multiplying by their IDF values gives [1/2*log(3/3), 1/2*log(3/2), 0*log(3/2)] = [0, 1/2*log(3/2), 0], the TF-IDF vector for sentence 1.

Now some semantic information is captured: boy, which distinguishes sentence 1 from sentence 2, gets a positive weight, while good, which appears in every sentence, gets a weight of 0.
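The TF, IDF and TF-IDF steps above can be reproduced in plain Python as a sketch. It follows the textbook formulas from this section and assumes the natural logarithm, since the post does not fix a log base. Note that scikit-learn's TfidfVectorizer, used later in this post, applies a smoothed IDF and L2-normalises each row by default, so its numbers will differ.

```python
import math

# Corpus after stopword removal and lowercasing
docs = [["boy", "good"], ["girl", "good"], ["boy", "girl", "good"]]
vocab = ["good", "boy", "girl"]
N = len(docs)

def tf(word, doc):
    # Occurrences of the word in the sentence / total words in the sentence
    return doc.count(word) / len(doc)

def idf(word):
    # log(total sentences / sentences containing the word), natural log assumed
    n_containing = sum(1 for doc in docs if word in doc)
    return math.log(N / n_containing)

# One TF-IDF row per sentence, columns in vocab order
tfidf = [[tf(w, doc) * idf(w) for w in vocab] for doc in docs]

for i, row in enumerate(tfidf, start=1):
    print(f"Sentence {i}:", [round(v, 4) for v in row])
```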

Advantages

- Intuitive

- Word importance is captured.

Disadvantages

- Sparsity remains

- Out of Vocabulary

# TF-IDF
from sklearn.feature_extraction.text import TfidfVectorizer

tf_idf = TfidfVectorizer()
Y = tf_idf.fit_transform(corpus)
# See also the max_features parameter of TfidfVectorizer(), which keeps only the most frequent terms

corpus[0]
' in finance stock also capital stock consists of the shares of which ownership of a corporation or company is divided '

# Note: the vectorizer is fitted on corpus, which still contains stopwords and is not lemmatized,
# so its feature space differs from the BOW built on new_corpus; pass new_corpus to get the same features.
Y[0].toarray()

array([[0. , 0. , 0. , 0. , 0.20313319,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.25619636, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.22631273, 0. , 0.29831497, 0. , 0.25619636,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.29831497,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.29831497, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.1543105 , 0. , 0. ,

0. , 0. , 0. , 0.16818137, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.42622655, 0. , 0. , 0. , 0. ,

0. , 0. , 0.18419413, 0. , 0. ,

0. , 0.20313319, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.20313319, 0. , 0. ,

0. , 0. , 0. , 0. , 0.30862099,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.11219189,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.22631273, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. ]])

If you would like to download or run the code, the notebook is linked below.

https://www.kaggle.com/neelakashchatterjee/detailed-nlp-basics-and-hands-on-implementation

That’s all for today. I will continue with the remaining basics of NLP in my next post. See ya, geeks!!

Credits :

- I would like to thank Krish Naik (Founder of iNeuron) a lot; I’ve learnt many of these concepts from his YouTube channel.

- My colleagues at Cloudcraftz Solution Pvt. Ltd., who have helped me understand the practical usage of the concepts mentioned above.