Hello Readers, today we are going to continue our discussion on the basics of Natural Language Processing followed by a simple case study where we will try to apply all that we have learned so far. This is a continuation of our previous post NLP Basics Part-1.

Today we are going to discuss some state-of-the-art techniques used in modern NLP tasks. So without further ado, let’s get started.

Note: Please do let me know if you find any part of this post inappropriate or misleading.

Word Embeddings

Word embedding is a technique for converting words into vectors of numbers.

Word Embeddings can be of two types:

- Based on Count or Frequency:

  - BOW

  - TF-IDF

  - One Hot Encodings

- Deep Learning Based:

  - Word2Vec

    - CBOW

    - SKIP GRAMS

Advantages of Word2Vec:

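- Reduces sparsity: each word gets a dense vector instead of a mostly-zero one

- Limited dimension: the vector size is fixed and does not grow with the vocabulary

- Captures semantic meaning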

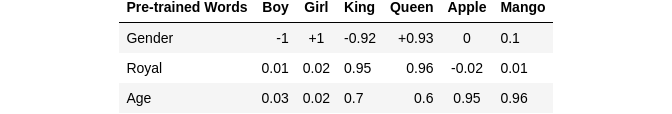

Say our vocabulary has 6 words: boy, girl, king, queen, apple and mango.

Each word is described along a set of features (dimensions), such as gender and royal in the image above. In this illustration the features have human-readable names to build intuition; in a real model they are learned by the DL model, have no names, and there are usually a few hundred of them (the Google News model used later has 300). The value each word gets along each feature is also assigned (learned) by the DL model.

In the example above:

- With respect to gender, the model assigns boy -1 (we will see later how it learns this), and since girl is the exact opposite of boy, it gets +1. King gets -0.92, and Queen, being of the opposite gender, gets +0.93 (the values are slightly different because they are decided by the model). Apple gets 0 because it has nothing to do with gender, and so does Mango, which gets 0.1, an almost negligible value.

- Similarly, with respect to royal, boy gets 0.01 as it has very little relation to royalty, and so does girl, which gets 0.02. A King is royal, so it gets 0.95, and so is a Queen, which gets 0.96. Royal has very little to do with Apple, so it gets -0.02 (the negative sign may mean the model sees its relation to royalty as slightly opposite), whereas Mango gets 0.01, which is also negligible but positive; maybe that is because we call the mango the king of fruits.

- Basically, every word gets a score along every feature of the model. Here we have used only three features, but in general there are many more.

So, from the example above, we get these word vectors:

- boy: [-1, 0.01, 0.03]

- girl: [1, 0.02, 0.02]

- king: [-0.92, 0.95, 0.7]

- queen: [0.93, 0.96, 0.95]

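So, if we compute King - Boy + Girl, we get (approximately) Queen: just do the element-wise vector addition and subtraction, and you’ll see that the resulting vector, [1.08, 0.96, 0.69], is very close to the Queen vector.

Note: similarity between two vectors can be checked with metrics like cosine similarity and cosine distance. Cosine similarity is the dot product of the vectors divided by the product of their lengths, cos(A, B) = A·B / (|A| |B|), and ranges from -1 to 1.

Formula: cosine-similarity = 1 - cosine-distance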
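To check the arithmetic, here is a small NumPy sketch that computes King - Boy + Girl from the toy vectors above and ranks the four words by cosine similarity. The vectors are the illustrative values from this example, not the output of a real model, and `cosine_similarity` / `cosine_distance` are our own helper functions.

```python
import numpy as np

# Toy 3-dimensional vectors from the example above (illustrative values, not a real model).
vectors = {
    "boy": np.array([-1.0, 0.01, 0.03]),
    "girl": np.array([1.0, 0.02, 0.02]),
    "king": np.array([-0.92, 0.95, 0.7]),
    "queen": np.array([0.93, 0.96, 0.95]),
}

def cosine_similarity(a, b):
    """cos(a, b) = a.b / (|a| * |b|), between -1 and 1."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def cosine_distance(a, b):
    return 1.0 - cosine_similarity(a, b)

# King - Boy + Girl, computed element by element
result = vectors["king"] - vectors["boy"] + vectors["girl"]

# Rank every word by its cosine similarity to the result vector
scores = {word: cosine_similarity(result, vec) for word, vec in vectors.items()}
for word, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{word:>5}: similarity={score:.3f}, distance={cosine_distance(result, vectors[word]):.3f}")

best = max(scores, key=scores.get)
print("closest word:", best)
```

With these toy numbers, queen comes out on top with a cosine similarity of about 0.98. Real tools such as gensim's `most_similar` also leave the query words themselves out of the results.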

Now the question arises: how are these models trained to give us these scores?

Continuous Bag of Words

Let the sentence under consideration be:

KRISH CHANNEL IS RELATED TO DATA SCIENCE.

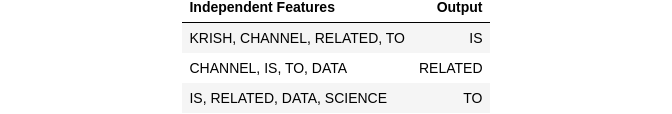

In continuous bag of words, we have a window size. Let’s say window = 5.

Choose an odd window size, so that each window has a middle word; that middle word is our output (target). For example:

For a moving window of size 5, our first window is “KRISH CHANNEL IS RELATED TO”, so the middle word “IS” becomes our output, and the two words on either side of it, “KRISH”, “CHANNEL”, “RELATED” and “TO”, become our features (inputs).

Similarly, we build the remaining examples by sliding the window one word at a time.

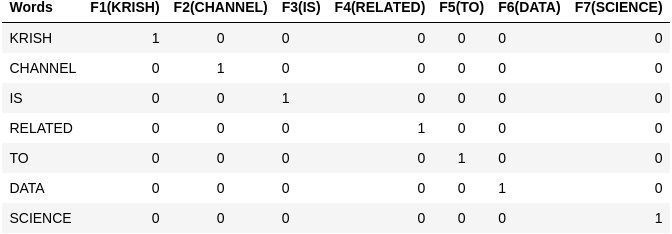
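The sliding window can be sketched in a few lines of Python. `cbow_pairs` is a hypothetical helper written for this illustration; it only uses windows that fit entirely inside the sentence, as in the walk-through, while libraries typically also build (shorter) contexts for the words near the edges.

```python
def cbow_pairs(tokens, window=5):
    """Slide an odd-sized window over tokens: the middle word is the target (output)
    and the other words in the window are the context (features)."""
    if window % 2 == 0:
        raise ValueError("window size should be odd so each window has a middle word")
    half = window // 2
    pairs = []
    for center in range(half, len(tokens) - half):
        context = tokens[center - half:center] + tokens[center + 1:center + half + 1]
        pairs.append((context, tokens[center]))
    return pairs

sentence = "KRISH CHANNEL IS RELATED TO DATA SCIENCE"
for context, target in cbow_pairs(sentence.split(), window=5):
    print(context, "->", target)
```

For our 7-word sentence this yields three training pairs, with IS, RELATED and TO as the targets.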

Now, based on the vocabulary of this sentence (KRISH, CHANNEL, IS, RELATED, TO, DATA, SCIENCE), we create a bag-of-words (one-hot) vector for each word. The number of features is 7, and the row names are the individual words (since all the words here are unique, the rows and the feature columns have the same names).

BOW:

So the vector for KRISH is [1,0,0,0,0,0,0], and so on.
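The one-hot (BOW) vectors for this 7-word vocabulary are simply the rows of a 7×7 identity matrix. A minimal NumPy sketch:

```python
import numpy as np

vocab = ["KRISH", "CHANNEL", "IS", "RELATED", "TO", "DATA", "SCIENCE"]
# Row i of the 7x7 identity matrix is the one-hot (BOW) vector of vocab[i].
one_hot = np.eye(len(vocab), dtype=int)
word_to_vec = {word: one_hot[i] for i, word in enumerate(vocab)}

print(word_to_vec["KRISH"])  # [1 0 0 0 0 0 0]
print(word_to_vec["IS"])     # [0 0 1 0 0 0 0]
```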

Now the first document is [“KRISH”, “CHANNEL”, “RELATED”, “TO”] and the output is [“IS”].

So we pass this document through an Artificial Neural Network, with [“KRISH”, “CHANNEL”, “RELATED”, “TO”] as the features and [“IS”] as the label.

Each word in the features is represented by 7 values in BOW (for example, KRISH is [1,0,0,0,0,0,0]), so each word enters the network as 7 input neurons, and since there are 4 input words the input layer has 7×4 neurons (in CBOW the same input weights are shared by all four words, and their contributions to the hidden layer are averaged). The hidden layer has 5 neurons; this is the size of the word vectors we want, and here it is chosen equal to the window size of 5, although the two are independent choices (gensim, for instance, sets them separately with vector_size and window). The output layer has 7 neurons with softmax activation, because every word, including the label “IS”, is represented by 7 values.

Note: after training, each word is described by a vector of 5 values (the weights connecting it to the 5 hidden neurons), because the hidden layer has 5 neurons.

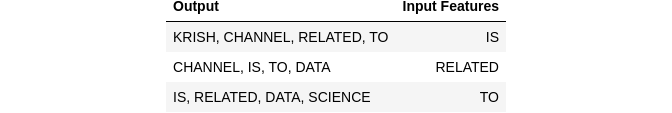
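To make the training step concrete, here is a minimal NumPy sketch of CBOW on our single sentence: the four context one-hot vectors share the input weights and are averaged, the 5 hidden values feed a 7-way softmax, and plain gradient descent on the cross-entropy loss updates both weight matrices. The hidden size, learning rate, number of epochs and random initialization are arbitrary assumptions, and real libraries such as gensim use faster approximations (negative sampling or hierarchical softmax) instead of a full softmax.

```python
import numpy as np

tokens = "KRISH CHANNEL IS RELATED TO DATA SCIENCE".split()
vocab = list(dict.fromkeys(tokens))      # the 7 unique words, in sentence order
index = {word: i for i, word in enumerate(vocab)}
V, HIDDEN, WINDOW = len(vocab), 5, 5     # hidden size 5 is our choice, as in the text
half = WINDOW // 2
eye = np.eye(V)                          # row i = one-hot vector of vocab[i]

# (context words, middle word) pairs from the sliding window
pairs = [(tokens[c - half:c] + tokens[c + 1:c + half + 1], tokens[c])
         for c in range(half, len(tokens) - half)]

rng = np.random.default_rng(42)
W_in = rng.normal(scale=0.1, size=(V, HIDDEN))   # input -> hidden, shared by all context words
W_out = rng.normal(scale=0.1, size=(HIDDEN, V))  # hidden -> output

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def forward(context):
    x = np.mean([eye[index[w]] for w in context], axis=0)  # average of the context one-hots
    h = x @ W_in                                           # 5 hidden values
    return x, h, softmax(h @ W_out)                        # a probability for each of the 7 words

def total_loss():
    # cross-entropy of the correct middle word, summed over all windows
    return sum(-np.log(forward(ctx)[2][index[tgt]]) for ctx, tgt in pairs)

loss_before = total_loss()
learning_rate = 0.5
for epoch in range(300):
    for context, target in pairs:
        x, h, probs = forward(context)
        grad_logits = probs - eye[index[target]]  # d(loss)/d(logits) for softmax + cross-entropy
        grad_h = W_out @ grad_logits              # back-propagate to the hidden layer
        W_out -= learning_rate * np.outer(h, grad_logits)
        W_in -= learning_rate * np.outer(x, grad_h)
loss_after = total_loss()

print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
print("5-value vector for KRISH:", np.round(W_in[index["KRISH"]], 3))
```

After training, row i of `W_in` is the 5-value vector for word i; the columns of `W_out` form a second set of word vectors, and either can be used.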

SKIPGRAM

The only difference is that the output of CBOW becomes our input here, and the inputs of CBOW become our outputs: given the middle word, the model predicts the surrounding words.

As far as the neural network is concerned, it is simply the CBOW network reversed.
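Reversing the CBOW pairs gives the skip-gram training examples: one (input, output) pair for every middle word and each of its surrounding words. This sketch uses a hypothetical helper, `skipgram_pairs`, with the same full-window convention as the CBOW walk-through. In gensim, the choice between the two architectures is the `sg` parameter of `Word2Vec` (`sg=0`, the default, for CBOW and `sg=1` for skip-gram).

```python
def skipgram_pairs(tokens, window=5):
    """Hypothetical helper: for each middle word, emit one (input, output) pair
    per surrounding word in the window (the reverse of CBOW)."""
    half = window // 2
    pairs = []
    for center in range(half, len(tokens) - half):
        for offset in range(-half, half + 1):
            if offset != 0:
                pairs.append((tokens[center], tokens[center + offset]))
    return pairs

tokens = "KRISH CHANNEL IS RELATED TO DATA SCIENCE".split()
pairs = skipgram_pairs(tokens, window=5)
for pair in pairs[:4]:
    print(pair)  # the four pairs with "IS" as input
```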

import nltk

import warnings

warnings.filterwarnings('ignore')

import re

from nltk.stem import PorterStemmer, WordNetLemmatizer

from nltk.corpus import stopwords

# Used for creating Bag of Words.

from sklearn.feature_extraction.text import CountVectorizer

# Punkt: a tokenizer trained with an unsupervised algorithm; nltk.word_tokenize relies on it.

nltk.download('punkt')

# Stopwords in English

nltk.download('stopwords')

nltk.download('wordnet')

nltk.download('omw-1.4')

from gensim.models import KeyedVectors, Word2Vec

import gensim.downloader as api

import gensim


Next, we load the word2vec model available through gensim that was trained on the Google News dataset. It contains 300-dimensional vectors for 3 million words and phrases.

w2v = api.load('word2vec-google-news-300')

Using this model we can do various things; below we look at the words most similar to a given word.

# We get the most similar words with their similarity scores, in descending order.

w2v.most_similar('cricket')

[('cricketing', 0.8372225761413574),

('cricketers', 0.8165745735168457),

('Test_cricket', 0.8094819188117981),

('Twenty##_cricket', 0.8068488240242004),

('Twenty##', 0.7624265551567078),

('Cricket', 0.75413978099823),

('cricketer', 0.7372578382492065),

('twenty##', 0.7316356897354126),

('T##_cricket', 0.7304614186286926),

('West_Indies_cricket', 0.6987985968589783)]

Average Word2Vec

We have seen that in Word2Vec, the size of each word vector equals the number of features (dimensions) the model uses. In the example above, boy was represented as [-1, 0.01, 0.03]. Now take the sentence “Boy is cute”: it has 3 words, and with the Google News model each word is described by a vector of size 300, so the sentence needs 3 × 300 = 900 values. This size keeps growing with longer sentences, and it differs from one sentence to another. To avoid this we use average Word2Vec, which simply takes the average of the word vectors, dimension by dimension. For example, if boy = [-1, -1, 0], is = [1, 1, 1] and cute = [0, 1, 0], the sentence becomes the single vector [(-1+1+0)/3, (-1+1+1)/3, (0+1+0)/3] = [0, 1/3, 1/3], which has the same size as a single word vector.
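A minimal NumPy sketch of average Word2Vec, using the toy vectors from the paragraph above (they are illustrative, not real model output). `average_word2vec` is a hypothetical helper; returning a zero vector when none of the words are known is our own assumption, one of several reasonable choices.

```python
import numpy as np

# Toy 3-dimensional word vectors from the example (not real model output).
word_vectors = {
    "boy": np.array([-1.0, -1.0, 0.0]),
    "is": np.array([1.0, 1.0, 1.0]),
    "cute": np.array([0.0, 1.0, 0.0]),
}

def average_word2vec(words, vectors):
    """Average the vectors of the known words, dimension by dimension (axis=0)."""
    known = [vectors[w] for w in words if w in vectors]
    if not known:
        # Assumption: fall back to a zero vector of the right size.
        dim = len(next(iter(vectors.values())))
        return np.zeros(dim)
    return np.mean(known, axis=0)

sentence_vector = average_word2vec("boy is cute".split(), word_vectors)
print(sentence_vector)  # approximately [0, 0.333, 0.333]
```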

Text Classification

The dataset can be downloaded from Kaggle: Spam Classification.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

data = pd.read_csv('spam.csv', encoding="ISO-8859-1").iloc[:, :2]

data.columns = ['Label', 'Message']

data['Label'].value_counts()

ham 4825

spam 747

Name: Label, dtype: int64

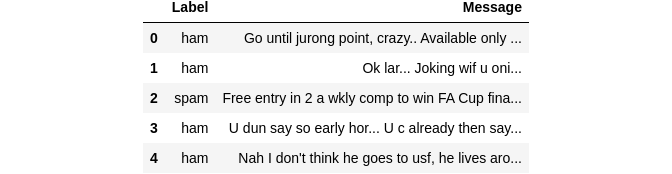

Let’s see what the messages look like.

data.head()

data['Message'].iloc[2]

"Free entry in 2 a wkly comp to win FA Cup final tkts 21st May 2005. Text FA to 87121 to receive entry question(std txt rate)T&C's apply 08452810075over18's"

We will now move on to pre-process the dataset.

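lemmatizer = WordNetLemmatizer()

# Build the stopword set once instead of re-reading the list for every word.
stop_words = set(stopwords.words('english'))

corpuses = []

for message in data['Message'].values:
    # Keep only letters and digits, then lowercase.
    words = re.sub('[^a-zA-Z0-9]', ' ', message)
    words = words.lower()
    sentence = nltk.word_tokenize(words)
    # Drop stopwords and lemmatize the remaining words.
    lemma = [lemmatizer.lemmatize(new) for new in sentence if new not in stop_words]
    sentence = ' '.join(lemma)
    # Placeholder for messages that contained only stopwords.
    if sentence == '':
        sentence = 'stopwords only'
    corpuses.append(sentence)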

Next, we take one document at a time and tokenize it into words.

word_sent = []

for s in corpuses:
    w = nltk.word_tokenize(s)
    word_sent.append(w)

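Now we choose a window size (let’s take 5 for the moment). There are various other parameters you may explore, but to keep things simple we only set window and min_count. min_count takes an integer: any word with a total frequency lower than min_count is ignored. Note that in gensim, window is the maximum distance between the target word and a context word on each side, so window=5 means up to 5 words on either side, not the 5-word window used in the CBOW example. All other parameters keep their defaults, such as 100-dimensional vectors (vector_size) and the CBOW architecture (sg=0).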

wtwov = gensim.models.Word2Vec(word_sent, window=5, min_count=1)

Average Word2Vec Function

def avgwtovec(docs):
    # Average the vectors of all the words in the document that the model knows.
    document = np.mean([wtwov.wv[wo] for wo in docs if wo in wtwov.wv], axis=0)
    return document.tolist()

Below we use the average Word2Vec function defined above to create a vector representation of each sentence.

word2vec = [avgwtovec(sents) for sents in word_sent]

# One row per message, one column per embedding dimension.
dataset = pd.DataFrame(word2vec)

Now that our feature space is ready, we can build a predictive model. We will use an XGBClassifier to train it.

import xgboost

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, recall_score, precision_score, plot_confusion_matrix, classification_report

labels = [1 if l == 'ham' else 0 for l in data['Label'].values]

labels = np.array(labels)

# Train Test Split

X_train, X_test, y_train, y_test = train_test_split(dataset, labels, test_size=0.3, random_state=1)

model_xg = xgboost.XGBClassifier()

model_xg.fit(X_train, y_train)

XGBClassifier(base_score=0.5, booster='gbtree', callbacks=None,

colsample_bylevel=1, colsample_bynode=1, colsample_bytree=1,

early_stopping_rounds=None, enable_categorical=False,

eval_metric=None, gamma=0, gpu_id=-1, grow_policy='depthwise',

importance_type=None, interaction_constraints='',

learning_rate=0.300000012, max_bin=256, max_cat_to_onehot=4,

max_delta_step=0, max_depth=6, max_leaves=0, min_child_weight=1,

missing=nan, monotone_constraints='()', n_estimators=100,

n_jobs=0, num_parallel_tree=1, predictor='auto', random_state=0,

reg_alpha=0, reg_lambda=1, ...)

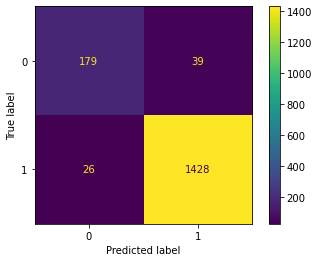

Lets see how we did on our test set .

predicts = model_xg.predict(X_test)

accuracy_score(y_test, predicts)

0.9611244019138756

recall_score(y_test, predicts)

0.9821182943603851

precision_score(y_test, predicts)

0.9734151329243353

plot_confusion_matrix(model_xg, X_test, y_test)

plt.show()

print(classification_report(y_test, predicts))

              precision    recall  f1-score   support

           0       0.87      0.82      0.85       218
           1       0.97      0.98      0.98      1454

    accuracy                           0.96      1672
   macro avg       0.92      0.90      0.91      1672
weighted avg       0.96      0.96      0.96      1672

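The predictions look quite decent: 96% accuracy, with weighted-average precision, recall and F1-score of 0.96. The minority spam class (label 0) does noticeably worse (recall 0.82, F1-score 0.85), so some spam messages are still classified as ham. This was a very basic case study and implementation of the concepts we studied today.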

Word Embedding Layer

- Take the sentences

- One Hot Encode them

- Pad them (pre or post). One-hot encoding turns each sentence into a list with one entry per word, and the number of words varies from one sentence to another, so to make them all the same length we add extra 0s at the front (pre) or at the end (post).
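The padding step can be sketched with a small hypothetical helper, `pad`, that mimics what Keras' `pad_sequences` does for sequences no longer than `maxlen` (`pad_sequences` can also truncate longer ones, which this sketch does not handle). The input lists are made-up encodings.

```python
def pad(sequences, maxlen, padding="pre", value=0):
    """Hypothetical helper: pad every sequence with `value` up to `maxlen`,
    at the front ("pre") or at the end ("post"). It does not truncate."""
    if padding not in ("pre", "post"):
        raise ValueError("padding must be 'pre' or 'post'")
    padded = []
    for seq in sequences:
        if len(seq) > maxlen:
            raise ValueError("sequence longer than maxlen; this sketch does not truncate")
        fill = [value] * (maxlen - len(seq))
        padded.append(fill + list(seq) if padding == "pre" else list(seq) + fill)
    return padded

encoded = [[5, 2, 7], [3]]  # made-up word indices for two sentences of different lengths
print(pad(encoded, maxlen=4, padding="pre"))   # [[0, 5, 2, 7], [0, 0, 0, 3]]
print(pad(encoded, maxlen=4, padding="post"))  # [[5, 2, 7, 0], [3, 0, 0, 0]]
```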

from tensorflow.keras.preprocessing.text import one_hot


# sentences with varying lengths

sent=['the glass of milk',

'the glass of juice',

'the cup of tea',

'I am a good boy',

'I am a good developer',

'understand the meaning of words',

'your videos are good']

We have to specify a vocabulary size ourselves, so we set it manually; each word is then treated as a one-hot vector of length vocab_size.

# Fixed vocab size

vocab_size = 10000

Below we pass each sentence in our corpus through one_hot to create the encodings. one_hot takes two arguments here: the sentence itself, and vocab_size, the length of the vector each word would be represented by.

It returns a list of integers for every sentence, one per word. Each integer is the index at which that word’s one-hot vector would have a “1”: with a vocabulary of 10000, all the other 9999 positions are “0”, so only the position of the “1” is returned. Note that one_hot assigns these indices by hashing the words, so different words can occasionally get the same index, and the indices may change between runs.

For example, in the encoding below, in the first sentence:

- “the glass of milk” is given by [1020, 7825, 7486, 6986], where the word “the” has a “1” at index 1020 and “0” in all the other 9999 positions. Similarly, the word “glass” has a “1” at position 7825 and “0” in all the other 9999 positions. So rather than giving us the entire representation of 10000 values, one_hot only gives us the position where the vector has a “1”.
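The idea behind one_hot is the hashing trick: hash each word and take the result modulo the vocabulary size. The sketch below is a simplified, hypothetical version (`hashed_indices`); it uses md5 so the indices are stable across runs, whereas Keras documents Python's built-in hash as its default, which is randomized per process for strings and therefore not consistent between runs. Like one_hot, it never produces index 0, which stays reserved for padding.

```python
import hashlib

def hashed_indices(sentence, vocab_size):
    """Hypothetical sketch of the hashing trick: map each word to an index in
    [1, vocab_size - 1]; index 0 is never produced, so it stays free for padding."""
    indices = []
    for word in sentence.lower().split():
        # md5 gives the same number in every run, unlike Python's hash() for strings
        digest = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16)
        indices.append(digest % (vocab_size - 1) + 1)
    return indices

vocab_size = 10000
for sen in ["the glass of milk", "the cup of tea"]:
    print(sen, "->", hashed_indices(sen, vocab_size))
```

Because the same word always hashes to the same value, “the” and “of” get the same index in both sentences, just as 1020 and 7486 repeat in the output below.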

layer = [one_hot(sen, vocab_size) for sen in sent]

layer

[[1020, 7825, 7486, 6986],

[1020, 7825, 7486, 6611],

[1020, 8210, 7486, 6831],

[3055, 9005, 8420, 7388, 1308],

[3055, 9005, 8420, 7388, 6231],

[8255, 1020, 8441, 7486, 8499],

[4567, 3049, 2355, 7388]]

Word Embedding Representation

Notice that the sentences have different lengths, but ML and DL models take the same number of features for each document (observation). To achieve that, we use padding.

from tensorflow.keras.layers import Embedding

from tensorflow.keras.models import Sequential

from tensorflow.keras.preprocessing.sequence import pad_sequences

So we take the length of the longest sentence (or any larger number) and use it as maxlen in pad_sequences, which makes all sentences the same length. Whether to pad pre or post is up to us: with pre, the extra “0”s are added at the front; with post, at the end.

Since our longest sentence has 5 words, we choose a value of 8 (greater than 5) and make all arrays that length.

sent_length = 8

embedded_docs = pad_sequences(layer, padding='pre', maxlen=sent_length)

print(embedded_docs)

[[ 0 0 0 0 1020 7825 7486 6986]

[ 0 0 0 0 1020 7825 7486 6611]

[ 0 0 0 0 1020 8210 7486 6831]

[ 0 0 0 3055 9005 8420 7388 1308]

[ 0 0 0 3055 9005 8420 7388 6231]

[ 0 0 0 8255 1020 8441 7486 8499]

[ 0 0 0 0 4567 3049 2355 7388]]

Now every sentence is an array of the same size, and we convert each word in a sentence into a dense vector, like the word2vec vectors explained above. For that we choose the number of features used to represent each word.

# Number of features used for converting each word to word2vec.

dim = 10

Below we create our own word embedding model using the Keras Embedding layer. We just need to pass the vocabulary size (here 10000), the number of features used to represent each word (dim), and the padded sentence length (input_length). Unlike a pre-trained Word2Vec model, this layer learns its vectors while it is trained together with the rest of the network on a task.

model = Sequential()

model.add(Embedding(vocab_size, dim, input_length=sent_length))

model.compile('adam','mse')


Note that the model has only been compiled, not trained, so the embedding values below are the layer’s random initial weights; they become meaningful once the layer is trained as part of a network. After padding, the first sentence, “the glass of milk”, is [0, 0, 0, 0, 1020, 7825, 7486, 6986], and each number here is converted into a vector of size 10, since we specified dim=10.

Below, when we predict on embedded_docs[0], we get an array of size 8×10, because the sentence is represented by 8 values (with padding) and each value is represented by 10 values.

embedded_docs[0]

array([ 0, 0, 0, 0, 1020, 7825, 7486, 6986], dtype=int32)

model.predict(embedded_docs[:1])[0]  # pass a batch of one sentence, then take its (8, 10) result

1/1 [==============================] - 0s 97ms/step

array([[-0.00123087, 0.03647018, 0.03407898, 0.0381111 , 0.04813857,

0.00189596, 0.01516304, -0.01877182, 0.01922247, -0.02681679],

[-0.00123087, 0.03647018, 0.03407898, 0.0381111 , 0.04813857,

0.00189596, 0.01516304, -0.01877182, 0.01922247, -0.02681679],

[-0.00123087, 0.03647018, 0.03407898, 0.0381111 , 0.04813857,

0.00189596, 0.01516304, -0.01877182, 0.01922247, -0.02681679],

[-0.00123087, 0.03647018, 0.03407898, 0.0381111 , 0.04813857,

0.00189596, 0.01516304, -0.01877182, 0.01922247, -0.02681679],

[ 0.02948665, 0.02786222, 0.00702927, -0.03976067, 0.01500887,

-0.00735202, -0.01333605, 0.00872318, -0.04330127, 0.01748199],

[-0.04851049, -0.018426 , 0.01364973, -0.04094089, 0.01301808,

0.03131111, 0.02555818, 0.01247604, 0.03791109, -0.0394867 ],

[-0.04684344, 0.00969238, -0.018878 , -0.03609868, -0.03488101,

-0.00920697, 0.009779 , 0.00690002, -0.00776314, 0.03579214],

[-0.04864953, -0.02823101, 0.04531744, -0.04716897, 0.04260788,

0.03348962, -0.04699815, -0.04278564, -0.01976631, -0.00438765]],

dtype=float32)
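Under the hood, an Embedding layer is essentially a trainable lookup table of shape (vocab_size, dim), and predicting on a padded sentence just picks one row per index. Here is a NumPy sketch, assuming a table filled with small random values like an untrained layer (Keras' default initializer draws uniformly from -0.05 to 0.05). It shows why the first four rows of the output above are identical: they all look up index 0, the padding.

```python
import numpy as np

vocab_size, dim = 10000, 10
rng = np.random.default_rng(0)
# Hypothetical stand-in for an untrained Embedding layer: a (vocab_size x dim) table
# of small random values, uniform in [-0.05, 0.05).
embedding_matrix = rng.uniform(-0.05, 0.05, size=(vocab_size, dim))

padded_sentence = np.array([0, 0, 0, 0, 1020, 7825, 7486, 6986])  # "the glass of milk" after padding
vectors = embedding_matrix[padded_sentence]  # one row (10 values) per position

print(vectors.shape)                        # (8, 10)
print(np.allclose(vectors[0], vectors[3]))  # True: every padding 0 looks up the same row
```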

If you want to access the notebook, please visit the link given below:

NLP Part-2.

That’s all for today, will see you next time. See ya geeks!!

Credits :

- I would like to thank Krish Naik (Founder of iNeuron) a lot; I’ve learnt many of these concepts from his YouTube channel.

- My colleagues at Cloudcraftz Solution Pvt. Ltd., who have helped me understand the practical usage of the concepts mentioned above.